In Netpicker, you can set up multiple tenants to isolate your devices and administer them in separate workspaces. Each tenant has its own devices, and users can be given access to specific tenants.

Typical use cases for multi-tenancy include:

- Departments inside your organisation

- Datacenters which are isolated because of redundancy

- Networks in different physical locations around the world

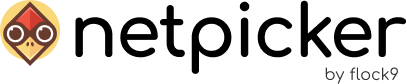

To create additional tenants, click your user icon and go to ‘Tenants’. This displays a list of your tenants and an option to create a new tenant.

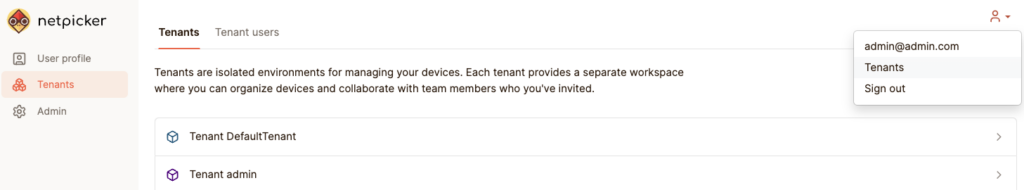

After creating a tenant, you can assign users to it on the ‘Tenant users’ tab:

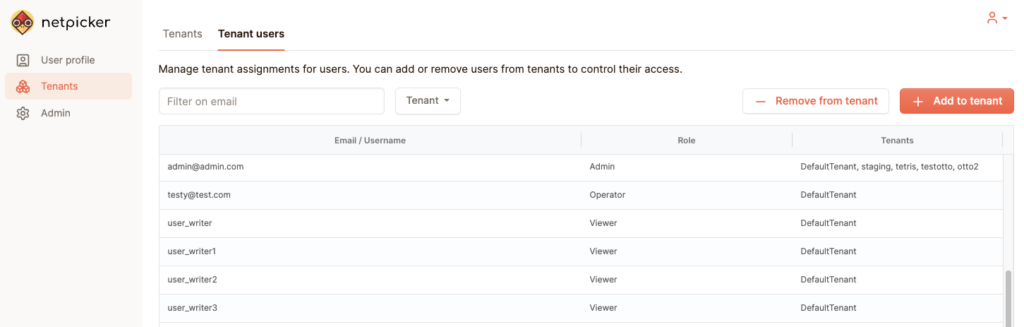

Each tenant in Netpicker has its own agent, a container that connects to the network devices in the tenant. When you navigate to your new tenant, you will see an information modal with the AGENT_ID you need to configure.

To spin up the agent, extend either docker-compose.override.yml or k8s.yml with the usual agent definition block. Two things change compared with the existing agent:

- the name (hostname in Docker Compose, metadata/name in Kubernetes), which in the examples below reads as agent-2 or carries the -2 suffix

- the AGENT_ID environment variable, which needs to be set to the ID from the information modal, shown as <the-new-agent-id>

Once the new container or service has started successfully, the agent should connect to the API and the red dot in the UI should turn green.

```
agent:
  image: "netpicker/agent:latest"
  hostname: agent-2
  labels:
    netpicker.io: service
    service.netpicker.io: agent
  environment:
    AGENT_ID: <the-new-agent-id>
    CLI_PROXY_ADDR: '0.0.0.0'
    SHARED_SSH_TTL: 180
    NO_PROXY: "api,frontend"
  volumes:
    - secret:/run/secrets
  restart: unless-stopped
  depends_on:
    api:
      condition: service_healthy
  healthcheck:
    test: "echo LST | nc -v 127.0.0.1 8765"
    start_period: 12s
    interval: 10s
```

Or, for Kubernetes (k8s):

```
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ include "netpicker.agent.fullname" . }}-2
  labels:
    {{- include "netpicker.labels" . | nindent 4 }}
    app.kubernetes.io/component: agent
    netpicker.io: service
    service.netpicker.io: agent
spec:
  replicas: 1
  selector:
    matchLabels:
      {{- include "netpicker.selectorLabels" . | nindent 6 }}
      app.kubernetes.io/component: agent
  template:
    metadata:
      labels:
        {{- include "netpicker.selectorLabels" . | nindent 8 }}
        app.kubernetes.io/component: agent
        netpicker.io: service
        service.netpicker.io: agent
    spec:
      hostname: agent
      {{- include "netpicker.imagePullSecrets" . | nindent 6 }}
      containers:
        - name: agent
          image: {{ include "netpicker.image" (dict "global" .Values.global "image" .Values.images.agent) }}
          imagePullPolicy: {{ .Values.images.agent.pullPolicy }}
          env:
            - name: AGENT_ID
              value: <the-new-agent-id>
            - name: CLI_PROXY_ADDR
              value: {{ .Values.agent.cliProxyAddr | quote }}
            - name: SHARED_SSH_TTL
              value: {{ .Values.agent.sharedSshTtl | quote }}
          envFrom:
            - configMapRef:
                name: {{ include "netpicker.fullname" . }}-tag-params
          ports:
            - name: vault
              containerPort: {{ .Values.agent.service.portVault }}
              protocol: TCP
            - name: proxy
              containerPort: {{ .Values.agent.service.portProxy }}
              protocol: TCP
          volumeMounts:
            - name: secret
              mountPath: /run/secrets
          livenessProbe:
            tcpSocket:
              port: 8765
            initialDelaySeconds: 12
            periodSeconds: 10
          readinessProbe:
            tcpSocket:
              port: 8765
            initialDelaySeconds: 12
            periodSeconds: 10
          resources:
            {{- toYaml .Values.agent.resources | nindent 12 }}
      volumes:
        - name: secret
          persistentVolumeClaim:
            claimName: {{ include "netpicker.agent.fullname" . }}-data
      securityContext:
        fsGroup: 911
---
apiVersion: v1
kind: Service
metadata:
  name: {{ include "netpicker.agent.fullname" . }}-2
  labels:
    {{- include "netpicker.labels" . | nindent 4 }}
    app.kubernetes.io/component: agent
spec:
  type: {{ .Values.agent.service.type }}
  ports:
    - port: {{ .Values.agent.service.portProxy }}
      targetPort: proxy
      protocol: TCP
      name: proxy
    - port: {{ .Values.agent.service.portVault }}
      targetPort: vault
      protocol: TCP
      name: vault
  selector:
    {{- include "netpicker.selectorLabels" . | nindent 4 }}
    app.kubernetes.io/component: agent
```
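
The Kubernetes template above reads several settings from the Helm chart's values. As a rough orientation, the fragment below lists the keys the template references. The key names are taken from the template itself; every value is an assumption or a placeholder (the two agent settings simply mirror the Docker Compose example), so keep whatever your existing values file already defines.

```
# Hypothetical values fragment: only the keys referenced by the template above.
global: {}                     # passed to the netpicker.image helper; contents depend on your chart
images:
  agent:
    pullPolicy: IfNotPresent   # assumption: any valid Kubernetes imagePullPolicy works here
    # further image keys are whatever the netpicker.image helper expects
agent:
  cliProxyAddr: "0.0.0.0"      # mirrors CLI_PROXY_ADDR in the Docker Compose example
  sharedSshTtl: 180            # mirrors SHARED_SSH_TTL in the Docker Compose example
  service:
    type: ClusterIP            # assumption: the Kubernetes default Service type
    portProxy: <proxy-port>    # placeholder: use the port your chart already defines
    portVault: <vault-port>    # placeholder: use the port your chart already defines
  resources: {}                # optional requests/limits, rendered via toYaml
```

AGENT_ID does not appear in this fragment because the template sets it directly instead of reading it from the values.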